Nissan engineers have drawn inspiration from the animal kingdom to develop new technologies that will shape the future of mobility. One of the long-term goals of Nissan's R&D department is to reduce accidents to a minimum.

The aim is for the number of accidents to approach zero over the years. Toru Futami, Director of Advanced Technology and Research, says that studying the behaviour of animals that move in groups helps engineers understand how vehicles can interact with one another to create a safer, more efficient driving environment.

"In our ongoing quest to develop collision-avoidance systems for the next generation of cars, we need to look to Mother Nature to find the most suitable answer. At the moment, the research is focused on the behaviour patterns of fish."

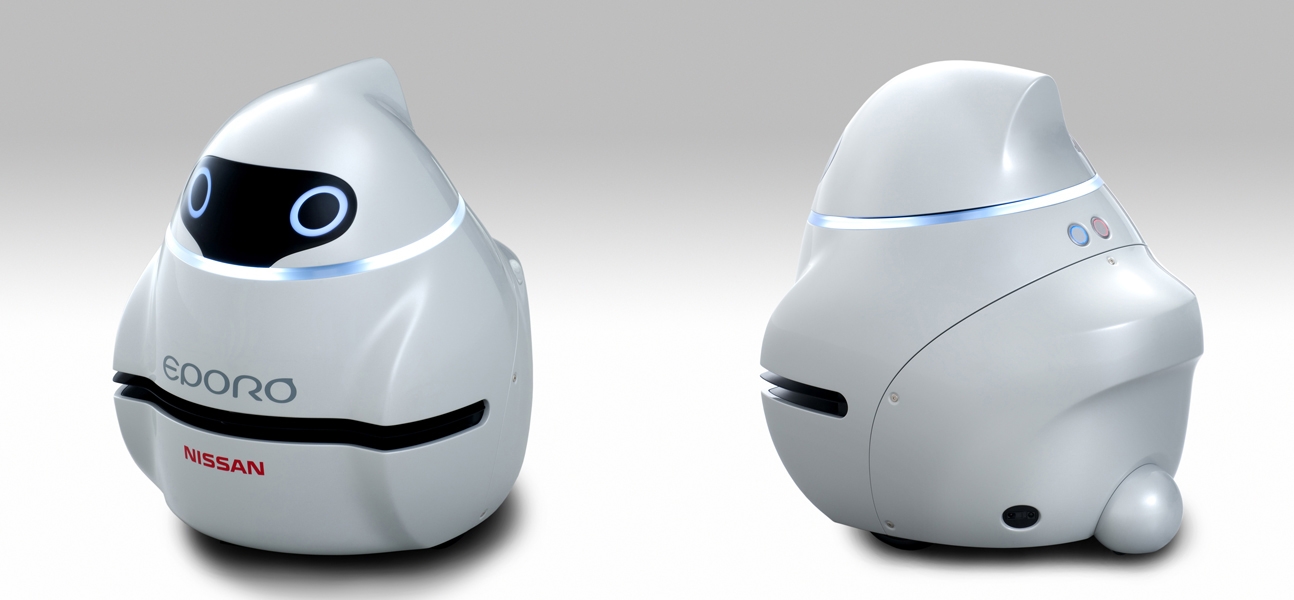

The research team has created EPORO (EPisode 0 RObot), which uses Laser Range Finder (LRF) technology, inspired by the compound eyes of bees, whose field of vision spans more than 300 degrees, along with other advanced technologies. Six EPORO units communicate with one another to keep track of their positions. The goal is twofold: to avoid collisions and to travel side by side or in a single direction, just as fish do when they swim in schools.

"Today's traffic laws assume that cars drive within lanes and obey road signs under the driver's control, but if all cars were autonomous, the need for lanes and even signs could disappear. We talked earlier about fish, and fish follow these three rules: don't go too far, don't get too close and don't hit each other. A school of fish has no lines to guide it, yet its members manage to swim very close to one another. So if cars could behave the same way as a group, and do so autonomously, we should be able to have more vehicles on the move at the same time without widening the roads. This would solve traffic congestion," explains Futami.

Futami adds that the robots can also communicate with one another at an intersection and decide which of them may pass and which must wait, removing the need for traffic signals.
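
The three rules Futami attributes to fish (don't go too far, don't get too close, don't hit each other) can be illustrated with a small sketch. This is not Nissan's control algorithm, which the article does not describe. The function name `steering`, the three distance thresholds and the gain `hard_gain` are all hypothetical values chosen for illustration.

```python
import math

def steering(me, others, collision=0.3, too_close=1.0, too_far=3.0, hard_gain=5.0):
    """Return an (x, y) steering vector for one agent.

    Illustrative only: thresholds and gains are assumptions,
    not values taken from EPORO.
    """
    sx = sy = 0.0
    for ox, oy in others:
        dx, dy = ox - me[0], oy - me[1]
        dist = math.hypot(dx, dy)
        if dist == 0.0:
            continue  # coincident positions: no direction is defined
        ux, uy = dx / dist, dy / dist  # unit vector toward the neighbour
        if dist > too_far:
            # Rule 1: don't go too far -> pull toward the neighbour
            sx += ux * (dist - too_far)
            sy += uy * (dist - too_far)
        elif dist < collision:
            # Rule 3: don't hit each other -> strong push away
            sx -= ux * hard_gain * (too_close - dist)
            sy -= uy * hard_gain * (too_close - dist)
        elif dist < too_close:
            # Rule 2: don't get too close -> gentle push away
            sx -= ux * (too_close - dist)
            sy -= uy * (too_close - dist)
    return sx, sy

# A neighbour 5 units ahead is "too far", so the agent is pulled toward it.
pull = steering((0.0, 0.0), [(5.0, 0.0)])
# A neighbour 0.5 units ahead is "too close", so the agent is pushed back.
push = steering((0.0, 0.0), [(0.5, 0.0)])
```

Neighbours between `too_close` and `too_far` produce no steering at all, which is what lets a group settle into a tight formation without lane markings.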

Before developing EPORO, Nissan created the Biomimetic Car Robot, or BR23C, which mimics bees' remarkable ability to avoid collisions. It is a joint project with the Research Center for Advanced Science and Technology at the renowned University of Tokyo.

Inspired by the bee's compound eyes, which can see more than 300 degrees, the Laser Range Finder (LRF) detects obstacles within a 180-degree arc up to two metres away. The BR23C calculates the distance to the obstacle and immediately sends a signal to a microprocessor, which interprets this information and moves or repositions the robot to avoid a collision.
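
The detect-then-turn sequence described above can be sketched as follows. Only the 180-degree arc and the two-metre range come from the article. The function name `avoidance_turn`, the evenly spaced scan format, the fixed 45-degree turn and the left/right sign convention are assumptions made for illustration, not details of the BR23C.

```python
def avoidance_turn(ranges_m, max_range_m=2.0, fov_deg=180.0, turn_deg=45.0):
    """Return a turn in degrees (positive = left, negative = right, 0 = keep heading).

    ranges_m: distances in metres, evenly spaced across the field of view,
    from the far right (-fov/2) to the far left (+fov/2). Hypothetical format.
    """
    if len(ranges_m) < 2:
        raise ValueError("need at least two range readings")

    # Find the closest reading in the scan.
    nearest_i = min(range(len(ranges_m)), key=lambda i: ranges_m[i])
    if ranges_m[nearest_i] > max_range_m:
        return 0.0  # nothing within detection range

    # Convert the reading's index into a bearing relative to straight ahead.
    step = fov_deg / (len(ranges_m) - 1)
    bearing = -fov_deg / 2 + nearest_i * step

    # Turn away from the obstacle; dead ahead defaults to a left turn.
    return -turn_deg if bearing > 0 else turn_deg

# Five readings from right to left; an obstacle 1 m away on the far left.
turn = avoidance_turn([3.0, 3.0, 3.0, 3.0, 1.0])
```

In this example the nearest obstacle sits on the left, so the sketch turns right. A real controller would presumably scale the turn with distance and bearing rather than use a fixed angle.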

"The split second it detects an obstacle," explains Toshiyuki Andou, Director of Nissan's Mobility Laboratory and the project's lead, "the robot will mimic the movements of a bee and immediately change direction to avoid a collision."